In this post we will develop a deep learning neural network trading system using TensorFlow. We will build a classifier that tells us whether the next candle will be bullish or bearish. The model can be used for any instrument and any timeframe. Once we have the model we will backtest it and see what accuracy it achieves. This is an educational post, so don’t try to trade with this model. When we open a trade we need to take care of risk, meaning we need to know where to put the stop loss. Read the post on Forex Neural Network Trading.

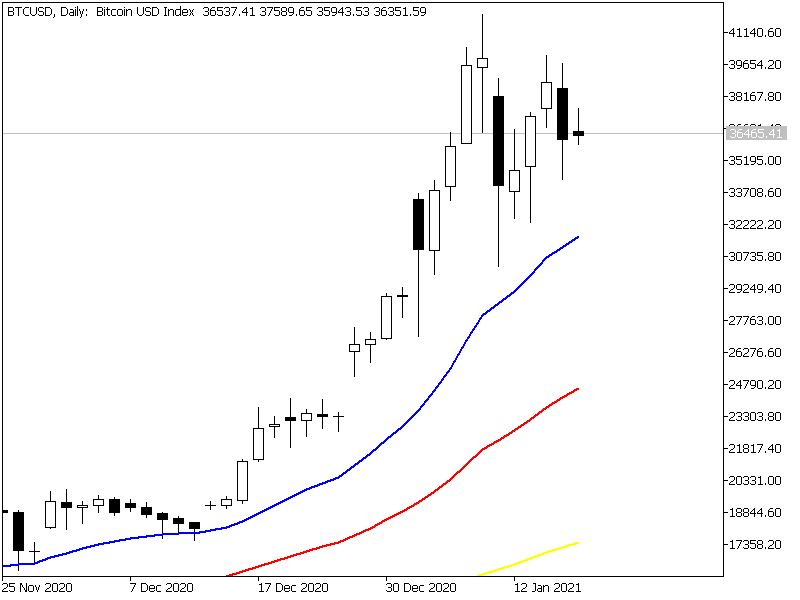

Bitcoin has seen a lot of volatility. It saw a high of $61K and then fell below $35K in the following months. I did not buy bitcoin when it was trading at $1 per bitcoin. Why? In those days bitcoin was generally unknown and whatever publicity it got was very negative. Most of the media was reporting that bitcoin was being used by criminals, the mafia and drug gangs. Those days are long gone, but you can still profit from trading the daily bitcoin volatility.

Why Do We Need To Predict The Next Candle?

Imagine if you could predict the Bitcoin daily candle with a high degree of accuracy. Will it make you rich? I don’t know, but in this post we will try to build a deep learning neural network trading system that does precisely that. We will measure the accuracy of this system, and if we get high accuracy we can think about trading live with it. So stay tuned and read till the end to discover the accuracy of this system and whether we can trade live with it or not.

Predicting the next candle can help like this. Suppose you see a good short trade setup using chart analysis and technical analysis. You are using indicators like MACD, RSI and Stochastic and you have got a nice bearish divergence pattern. You are all set to open a trade with 100 lots. Oops! Will you do it? Do you need more confirmation? Suppose your neural network has 60% accuracy and it predicts that the next daily candle is bullish, indicating that the stop loss will likely get hit and you will lose the 100 lot trade, meaning you lose big time. You avoid the trade and only take those trades that agree with the predicted direction of the next candle.

Most of these deep learning neural network models give good accuracy on training data but perform poorly on unseen data. What we need is a deep learning trading algorithm that predicts unseen data with high accuracy, like 80-90%. Can we build such an algorithm? We will try to do that in this post. The model that we are going to build and then backtest is a crude one. Read till the end of the post to discover its accuracy. The purpose is to help you understand how to build these models. Our subsequent models should provide better accuracy. The problem with financial markets is that they are highly unpredictable. Every moment some exogenous variable is interacting with the financial market pricing system, and we have a chaotic system. Read the post on high frequency trend following system.

Let’s start with defining a function that will download live data from MT5. You will be happy to know that we can now easily connect Python with MetaTrader5. MetaQuotes, the company that makes and sells MetaTrader5, finally decided to provide a package on PyPI that connects Python with MT5. Just use the command pip install MetaTrader5 and the package will be installed. Now you can use it to download live data for any timeframe, any currency pair and any stock.

    from datetime import datetime
    import time

    import MetaTrader5 as mt5
    import pandas as pd
    import numpy as np
    import tensorflow as tf
    from tensorflow.keras.models import Sequential
    from tensorflow.keras.layers import Dense, LSTM
    from tensorflow.keras.optimizers import Adam, RMSprop

    #download live data from MT5
    def rates(currency_pair, time_frame, bars):
        #establish connection to MetaTrader 5 terminal
        if not mt5.initialize():
            print("initialize() failed, error code =", mt5.last_error())
            quit()
        # get the requested number of bars from the required timeframe
        rates = mt5.copy_rates_from_pos(currency_pair, time_frame, 0, bars)
        # shut down connection to the MetaTrader 5 terminal
        mt5.shutdown()
        # create DataFrame out of the obtained data
        rates_frame = pd.DataFrame(rates)
        return rates_frame

    # df=rates("GBPUSD", mt5.TIMEFRAME_H6, 100)
    # df.tail()
    # df['time']=pd.to_datetime(df['time'], unit='s')

Now that we have downloaded the data from MetaTrader5 we can use that data in our Deep Neural Network. My idea is to develop a deep neural network that just predicts whether the next candle will be bullish or bearish. Read the post on GBPUSD opens with a huge 200 pips gap.
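To make the bullish/bearish idea concrete, here is a minimal sketch of how the label for the next candle can be built from closing prices. The prices are made up for illustration; the log return, the 1/0 direction and the one-step shift mirror what the model code in this post does.

```python
import numpy as np
import pandas as pd

# Hypothetical closing prices, used only for illustration.
close = pd.Series([1.30, 1.31, 1.29, 1.29, 1.32], name="close")

# Log return of each candle relative to the previous close.
ret = np.log(close / close.shift(1))

# 1 = bullish (positive return), 0 = bearish or unchanged.
direction = pd.Series(np.where(ret > 0, 1, 0), index=close.index)

# The target for row t is the direction of candle t+1,
# so the labels are shifted back by one row.
target = direction.shift(-1)

frame = pd.DataFrame({"close": close, "return": ret,
                      "direction": direction, "next_direction": target})
print(frame)
```

The last row has no next candle yet, so its target is NaN and it gets dropped before training.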

What Is Tensorflow?

TensorFlow is a powerful open source machine learning framework developed by Google. Since it is open source, you can install it using pip install tensorflow. It also has a GPU version which I have not installed. TensorFlow on a GPU is faster than the CPU-only version. Before you start using TensorFlow you should learn it, and learning it will take some time. Let’s define the Deep Neural Network for predicting the next candle:

    def deep_neural_network(currency_pair, time_frame, bars):
        df=rates(currency_pair, time_frame, bars)
        # convert time in seconds into the datetime format
        df['time']=pd.to_datetime(df['time'], unit='s')
        # log return of each candle and its direction (1 bullish, 0 otherwise)
        df['return']=np.log(df['close']/df['close'].shift(1))
        df['direction']=np.where(df['return'] > 0, 1, 0)
        lags=5
        cols=[]
        data=pd.DataFrame()
        data['price']=df.close
        data['return']=df['return']
        # the target is the direction of the next candle
        data['direction']=df['direction'].shift(-1)
        # lagged returns as features
        for lag in range(1, lags+1):
            col=f'lag_{lag}'
            data[col]=data['return'].shift(lag)
            cols.append(col)
        #Adding Features
        data['momentum']=data['return'].rolling(5).mean()
        data['volatility']=data['return'].rolling(20).std()
        data['distance']=(data['price']-data['price'].rolling(50).mean().shift(1))
        data.dropna(inplace=True)
        cols.extend(['return','momentum','volatility','distance'])
        optimizer=Adam(learning_rate=0.0001)
        model = Sequential()
        model.add(Dense(32, activation='relu', input_shape=(len(cols),)))
        model.add(Dense(32, activation='relu'))
        model.add(Dense(1, activation='sigmoid'))
        model.compile(optimizer=optimizer, loss='binary_crossentropy', metrics=['accuracy'])
        # hold out the last two rows and standardize with the training statistics
        training_data=data.iloc[:-2]
        mu, std=training_data.mean(), training_data.std()
        training_data_=(training_data-mu)/std
        test_data=data.iloc[-2:]
        test_data_=(test_data-mu)/std
        model.fit(training_data_[cols], training_data['direction'], epochs=100)
        pred=np.where(model.predict(test_data_[cols]) > 0.5, 1, 0)
        # prediction for the first of the two held-out rows
        return pred[0][0]

How good is the above Deep Neural Network Trading System? We have no idea at this moment. Sequential() means we have a neural network model built in layers. Dense is just a layer that matrix multiplies the input with its weights, adds a bias and applies the activation function. Want to master deep learning neural networks? You will have to learn and master matrices. Matrices are the backbone of machine learning and deep learning models.
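To show what the matrix multiplication inside a Dense layer looks like, here is a rough NumPy sketch of a forward pass through a 9 -> 32 -> 32 -> 1 network like the one above. The weights and the input batch are random and purely illustrative. This is not how Keras is implemented internally, only the arithmetic that each layer performs.

```python
import numpy as np

rng = np.random.default_rng(0)

def relu(z):
    return np.maximum(z, 0.0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def dense(x, weights, bias, activation):
    """One Dense layer: multiply inputs by weights, add bias, apply activation."""
    return activation(x @ weights + bias)

# Hypothetical batch of 4 samples with 9 features (5 lags + 4 extra features).
x = rng.normal(size=(4, 9))

# Randomly initialised weights, same layer sizes as the model: 9 -> 32 -> 32 -> 1.
w1, b1 = rng.normal(size=(9, 32)) * 0.1, np.zeros(32)
w2, b2 = rng.normal(size=(32, 32)) * 0.1, np.zeros(32)
w3, b3 = rng.normal(size=(32, 1)) * 0.1, np.zeros(1)

hidden1 = dense(x, w1, b1, relu)
hidden2 = dense(hidden1, w2, b2, relu)
prob_bullish = dense(hidden2, w3, b3, sigmoid)

print(prob_bullish.shape)  # (4, 1): one probability per sample
```

Training is then just a matter of adjusting w1, b1, w2, b2, w3 and b3 so that the output probabilities match the observed directions as closely as possible.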

Backtesting Tensorflow Deep Learning Neural Network Trading System

How are we going to know the accuracy of this Deep Neural Network Trading System? We need to backtest it. We first download the daily data from MT4 as a csv file. Below I have provided a function that reads this csv file into a pandas dataframe. Read the post on how to predict weekly gold prices with kernel ridge regression. Now we need to backtest this Deep Neural Network Trading Algorithm:

    #read data from a csv file
    def get_data(currency_pair, timeframe):
        link='D:/Shared/MarketData/csv/{}{}.csv'.format(currency_pair, timeframe)
        data = pd.read_csv(link, header=None)
        data.columns=['date', 'time', 'open', 'high', 'low',
                      'close', 'volume']
        return data

    # df = get_data('GBPUSD', 1440)
    # df.head()
    # df.shape

If we use the deep_neural_network function that I have provided above, TensorFlow starts complaining after a few iterations that we are retracing a lot, which is resource intensive and not good. Why are we retracing a lot? We are building the model again on each function call. So below I build the model once and then reuse it again and again. To do that we need to define the model outside the deep_neural_network function:

    def make_model():
        optimizer=Adam(learning_rate=0.0001)
        model = Sequential()
        # 9 inputs: 5 lagged returns plus return, momentum, volatility and distance
        model.add(Dense(32, activation='relu', input_shape=(9,)))
        model.add(Dense(32, activation='relu'))
        model.add(Dense(1, activation='sigmoid'))
        model.compile(optimizer=optimizer, loss='binary_crossentropy', metrics=['accuracy'])
        return model

    # model=make_model()

Now we redefine the deep neural network. We pass the above model as an argument to the deep_neural_network function. This way we save resources and avoid building the model again on each function call. We will be calling this deep_neural_network function a lot when we do backtesting:

    def deep_neural_network(df, model):
        # log return of each candle and its direction (1 bullish, 0 otherwise)
        df['return']=np.log(df['close']/df['close'].shift(1))
        df['direction']=np.where(df['return'] > 0, 1, 0)
        lags=5
        cols=[]
        data=pd.DataFrame()
        data['price']=df.close
        data['return']=df['return']
        # the target is the direction of the next candle
        data['direction']=df['direction'].shift(-1)
        # lagged returns as features
        for lag in range(1, lags+1):
            col=f'lag_{lag}'
            data[col]=data['return'].shift(lag)
            cols.append(col)
        #Adding Features
        data['momentum']=data['return'].rolling(5).mean()
        data['volatility']=data['return'].rolling(20).std()
        data['distance']=(data['price']-data['price'].rolling(50).mean().shift(1))
        data.dropna(inplace=True)
        cols.extend(['return','momentum','volatility','distance'])
        # hold out the last two rows and standardize with the training statistics
        training_data=data.iloc[:-2]
        mu, std=training_data.mean(), training_data.std()
        training_data_=(training_data-mu)/std
        test_data=data.iloc[-2:]
        test_data_=(test_data-mu)/std
        # note: fit() continues from the weights left by the previous call
        model.fit(training_data_[cols], training_data['direction'], epochs=100)
        pred=np.where(model.predict(test_data_[cols]) > 0.5, 1, 0)
        # prediction for the first of the two held-out rows
        return pred[0][0]

Let’s define the backtesting function. We read the csv file and then use the last 500 days for backtesting. We make a column direction which gives us the actual direction. Download this MT4 Camarilla pivot point indicator. We then use the above deep_neural_network function to predict the direction. In the end we make a confusion matrix:

    def neural_network_backtest(currency_pair, model, timeframe):
        data=get_data(currency_pair, timeframe)
        if (data.shape[0] < 2000):
            return
        data['direction'] = np.where(np.log(data.close/data.close.shift(1)) > 0, 1, 0)
        data['pred']=0
        program_starts = time.time()
        for x in range(500):
            # column 8 is 'pred'; each call sees a sliding window of 1500 candles
            data.iloc[len(data)-501+x,8]=deep_neural_network(
                data.iloc[len(data)-2000+x:len(data)-500+x], model)
        now = time.time()
        print("The backtest took {0} minutes".format((now - program_starts)/60.0))
        df_confusion = pd.crosstab(data.direction.iloc[len(data)-501:len(data)-2],
                                   data.pred.iloc[len(data)-501:len(data)-2])
        return df_confusion

    # df_confusion=neural_network_backtest("GBPUSD", model, 1440)

Backtesting this Deep Neural Network Trading Algorithm takes a long time, tens of minutes and possibly more than 60 minutes depending on the machine. Python is the best language when it comes to building deep learning models. By giving us the MetaTrader5 Python package, MetaQuotes has put a powerful tool at our disposal. We can now build very powerful deep learning models and use them in making predictions. Know who are Interdealer Brokers.
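The sliding window logic of the backtest is easier to follow in isolation. Below is a simplified sketch of the same walk-forward idea on made-up random-walk prices. The naive_predictor function is a hypothetical stand-in for deep_neural_network, and the indexing is deliberately simpler than in the function above, so treat it as an illustration of the technique and not as a drop-in replacement.

```python
import numpy as np
import pandas as pd

def naive_predictor(window: pd.DataFrame) -> int:
    """Stand-in for deep_neural_network(): predict that the last direction repeats."""
    return int(window["close"].iloc[-1] > window["close"].iloc[-2])

def walk_forward(data: pd.DataFrame, train_size: int, n_tests: int) -> pd.DataFrame:
    """Slide a fixed-size window over the data and predict the candle after it."""
    rows = []
    start = len(data) - train_size - n_tests
    for x in range(n_tests):
        # Copy the window so the predictor cannot modify the original data.
        window = data.iloc[start + x : start + x + train_size].copy()
        target_pos = start + x + train_size  # first candle after the window
        actual = int(data["close"].iloc[target_pos] > data["close"].iloc[target_pos - 1])
        rows.append({"direction": actual, "pred": naive_predictor(window)})
    return pd.DataFrame(rows)

# Hypothetical random-walk prices, for illustration only.
rng = np.random.default_rng(1)
prices = pd.DataFrame({"close": 1.30 + rng.normal(0, 0.005, 300).cumsum()})

results = walk_forward(prices, train_size=200, n_tests=50)
confusion = pd.crosstab(results["direction"], results["pred"])
print(confusion)
```

Each prediction only ever sees candles that come before the candle being predicted, which is the property a backtest must preserve.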

The backtest took 28.949617040157317 minutes. We can disregard the digits after the decimal point, so the backtest took roughly 30 minutes.

    >>> df_confusion
    pred         0    1
    direction
    0.0        123  117
    1.0        131  129

The accuracy of this model for the GBPUSD daily timeframe is 50.4%, that is (123+129)/500. If you flip a fair coin it has a 50% probability of heads and a 50% probability of tails. Suppose we flip a coin. If we get heads we say the next candle will be bullish, and if we get tails we say it will be bearish. So instead of this model we could just as well flip a coin and decide on that basis whether we will have a bullish candle or a bearish candle. We need to work on the model and make it better. The next thing that I want to try is one hot encoding to see if it improves performance. We also need to choose better features that have more predictive power.
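The accuracy can be read straight off the confusion matrix. Here is a short sketch using the numbers from the GBPUSD daily backtest above. The standard error line is my own addition: it gives the spread we would expect from a fair coin over the same number of candles, which helps to judge how far from 50% a result needs to be before it means anything.

```python
import numpy as np

# Confusion matrix from the GBPUSD daily backtest.
# Rows: actual direction (0, 1); columns: predicted direction (0, 1).
confusion = np.array([[123, 117],
                      [131, 129]])

total = confusion.sum()
correct = np.trace(confusion)  # correctly predicted bearish + bullish candles
accuracy = correct / total

# Standard error of a fair coin's hit rate over the same number of candles.
coin_std_error = np.sqrt(0.5 * 0.5 / total)

print(f"accuracy = {accuracy:.3f}")                    # accuracy = 0.504
print(f"coin flip std error = {coin_std_error:.3f}")   # coin flip std error = 0.022
```

An accuracy of 0.504 is well within one standard error of 0.5, so on this sample the model cannot be told apart from a coin.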

We can also backtest this TensorFlow deep learning neural network model on the H4 timeframe. But I think we should think about building a new model with better features instead of backtesting this neural network again and again. Pandas was persistently complaining with a SettingWithCopyWarning, so I decided to look into it and remove this warning. I was passing a slice of the same dataframe into the function and modifying it there, which is not good practice in pandas because a function argument is just a reference to the caller's object. Python does not have separate pass by value and pass by reference modes like C#. A Python variable is just a reference to an object, and when we pass a variable as a function argument we are passing that reference only. I need to correct that. I have rewritten the backtest function:

    def neural_network_backtest(currency_pair, model, timeframe):
        data=get_data(currency_pair, timeframe)
        # untouched copy of the raw data, used to cut the training windows
        df=data.copy()
        if (data.shape[0] < 2000):
            return
        data['direction'] = np.where(np.log(data.close/data.close.shift(1)) > 0, 1, 0)
        data['direction']=data['direction'].shift(-1)
        data['pred']=0
        program_starts = time.time()
        for x in range(500):
            # pass an explicit copy so the function never writes into a slice
            df_copy=df.iloc[-2000+x:-501+x].copy()
            data.iloc[-503+x,8]=deep_neural_network(df_copy, model)
        now = time.time()
        print("The backtest took {0} minutes".format((now - program_starts)/60.0))
        df_confusion = pd.crosstab(data.direction.iloc[-503:-2], data.pred.iloc[-503:-2])
        return df_confusion

    # df_confusion=neural_network_backtest("GBPUSD", model, 1440)

As you can see, I made a copy of the dataframe in the above code so that the function works on its own copy instead of modifying a slice of the original data again and again. I ran the backtest again, this time on GBPUSD 240 minutes, which is the H4 timeframe. It again took about 30 minutes and the accuracy this time was 49%. So this TensorFlow model is no better than a fair coin. We may as well flip a coin to decide whether we will have a bullish candle or a bearish candle.
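Why the copy matters can be shown with a tiny example. In this sketch add_return is a hypothetical helper that adds a column to its argument, in the same way that deep_neural_network adds 'return' and 'direction' to the dataframe it receives. The prices are made up.

```python
import pandas as pd

def add_return(df: pd.DataFrame) -> pd.DataFrame:
    """Adds a column to the frame it receives (it modifies its argument)."""
    df["return"] = df["close"].pct_change()
    return df

# Hypothetical prices, for illustration only.
prices = pd.DataFrame({"close": [1.30, 1.31, 1.29, 1.32]})

# Passing the frame itself: the caller's object gains the new column.
add_return(prices)
print(list(prices.columns))  # ['close', 'return']

# Passing an explicit copy of a slice: the original stays untouched.
clean = pd.DataFrame({"close": [1.30, 1.31, 1.29, 1.32]})
window = clean.iloc[1:].copy()
add_return(window)
print(list(clean.columns))   # ['close']
```

Without the .copy(), writing into a slice is exactly the situation that pandas warns about with SettingWithCopyWarning.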

    Epoch 44/50
    46/46 [==============================] - 0s 986us/step - loss: 0.0053 - accuracy: 1.0000
    Epoch 45/50
    46/46 [==============================] - 0s 1ms/step - loss: 0.0053 - accuracy: 1.0000
    Epoch 46/50
    46/46 [==============================] - 0s 1ms/step - loss: 0.0053 - accuracy: 1.0000
    Epoch 47/50
    46/46 [==============================] - 0s 1ms/step - loss: 0.0052 - accuracy: 1.0000
    Epoch 48/50
    46/46 [==============================] - 0s 1ms/step - loss: 0.0053 - accuracy: 1.0000
    Epoch 49/50
    Epoch 50/50
    The backtest took 27.7540943980217 minutes

    pred         0    1
    0.0        115  127
    1.0        130  129

    >>> (115+129)/(115+127+130+129)
    0.4870259481037924
    >>> 115+129
    244
    >>> 115+127+130+129
    501

Right now we should avoid trading with this TensorFlow neural network. We must work again and come up with a better neural network model. Did you know that deep learning finally defeated the Go champion Lee Sedol? We can do better, so stay tuned for the next version of this neural network model.

Deep learning is not a panacea. Financial markets are highly sentiment based and political nowadays. I don’t think that we will be able to build a highly accurate deep learning model that can make predictions about the next candle. If you have read the book The Man Who Solved The Market, you will be surprised to know that the machine learning model they use has just 51% accuracy and is making them billions of dollars. You should know that with a good money management system you can make a lot of money even with a trading system that has only 50% accuracy.
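The money management point comes down to simple arithmetic. As a rough sketch, assume every losing trade loses one unit of risk (1 R) and every winning trade makes a fixed multiple of it. The reward to risk ratios below are hypothetical, and spreads, commissions and slippage are ignored.

```python
def expectancy(win_rate: float, reward_to_risk: float) -> float:
    """Average profit per trade, in units of the amount risked (R)."""
    return win_rate * reward_to_risk - (1.0 - win_rate)

# Hypothetical reward-to-risk ratios, for illustration only.
for ratio in (1.0, 1.5, 2.0):
    print(f"win rate 50%, reward:risk {ratio}: {expectancy(0.5, ratio):+.2f} R per trade")
```

With a 50% win rate the system breaks even at a reward to risk ratio of 1 and earns 0.5 R per trade on average at a ratio of 2, which is why the size of the winners relative to the losers matters as much as the accuracy.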